AI-Powered Processing of 500+ Page Bid Packs at Scale (1/4)

Big organizations drown in documents: 500-plus-page PDFs, scanned annexes, tables, and forms that arrive not once, but all the time. Shoving entire files into an LLM is slow, expensive, and hard to defend. This post shows a better way with AI: extract a small, testable catalog of requirements, index the documents locally, retrieve only the few passages that matter, and demand verbatim, page-linked evidence.

We use procurement as the running example, but the same pattern applies anywhere you process large volumes of pages: vendor risk and security due diligence, contract and policy review, healthcare and regulatory dossiers, M&A data rooms, insurance claims, ESG reports, and more.

Procurement in a large organization or in the government isn't a grand tender once a year; it's a conveyor belt that never stops. At any point there are ten or more live events in flight, each inviting five, ten, sometimes twenty submissions, or more. Every “bid pack” is thick: administrative forms and signatures, bank guarantees, insurance certificates, ISO/IEC paperwork, security questionnaires, price schedules, annexes, appendices, and the occasional scanned test report. A single submission of 300–800 pages is normal; crossing 1,000 once you count annexes isn't rare. And when you finish the pile on your desk, another pile lands.

It's tempting to toss the whole PDF into a large language model and ask for a compliance matrix. That works in a demo. In production it's slow, expensive, and fragile. You pay for every token whether it matters or not. You collide with requests-per-minute and tokens-per-minute limits the moment deadlines tighten. Worst of all, you still don't get what audit actually needs: a decision you can defend, with a verbatim quote and the exact page it came from.

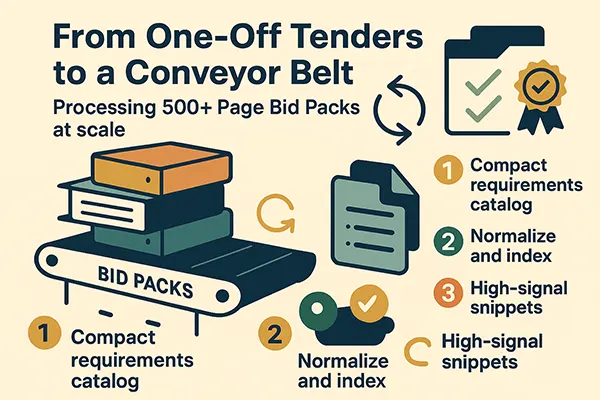

This article is about a durable way to run continuous bid reviews under real quotas. The shape is simple and repeatable:

- extract the RFP into a compact requirements catalog once,

- normalize and index each incoming bid pack locally, and

- per requirement, send only two to four short, high-signal snippets to the model with a strict must-cite rule.

No whole-document prompts. No guessing. If the model can't copy a short quote from the provided text and tell you the page, the answer is Missing, and you escalate just that requirement.

We'll show the token math that keeps Finance comfortable, the packing and triage that stay inside Azure/OpenAI rate limits, and the validation that keeps Legal happy. The goal isn't a hero run; it's a pipeline you can run every week of the year.

Can't I Just Use Agents?

Short answer: not for this. There's no “press one button and it reads 500 pages perfectly” agent. Frameworks can orchestrate tools and state, but they don't magically solve retrieval, snippet selection, packing, or rate-limit math. You still need the sequence we outlined.

What helps (but doesn't replace the pipeline):

- Azure/OpenAI Assistants (“agents”) - Great at tool-calling and long-running tasks. You still have to provide the retrieval/chunking step and clear rules for packing multiple requirements per call.

- LlamaIndex - Solid ingestion pipelines (chunkers, post-processors, rerankers). You still choose chunk size/overlap, fusion (e.g., RRF), diversity (MMR), and enforce must-cite prompts.

- LangChain / Semantic Kernel - Useful orchestration glue (retrievers, rerankers, throttling). You still own evidence guarantees, strict JSON schemas, and your Azure RPM/TPM budget.

Bottom line: agents are the runner, not the race plan. The plan is still: catalog -> index -> retrieve -> pack -> must-cite -> verify.

Not Just for Procurement

This isn't a one-trick play. The same rules-first flow works anywhere you face lots of pages and need evidence-cited decisions. Swap the “requirements catalog” for whatever makes sense in that domain (a control checklist, policy rules, question bank), and you're good to go.

Use cases that fit like a glove: vendor risk/security due diligence (SIG/CAIQ, SOC 2, ISO 27001 SoA), legal/contract review (clause checks, deviations lists), M&A and data-room triage, healthcare & life-sciences (ISO 13485, CE/FDA dossiers, sterilization/test reports), regulatory/compliance filings (GDPR/PCI/GLBA controls), insurance (policy wording, endorsements, claims packs), construction & engineering (method statements, HSE plans, BoQs), IT managed services (SLAs, DPAs, security appendices), ESG reporting (supply-chain attestations), and grants & academia (proposal compliance, annexes, ethics).

Same mechanics, same guarantees: send only the few high-signal passages per rule, demand a verbatim quote with page numbers, and keep a paper trail your auditors (or reviewers) can trust.

Why “just send the whole document” isn't feasible

Let's get concrete. A 1000-page PDF is easily 400K–600K tokens of text once you normalize it (line wraps, hyphenation fixes, text extracted from tables, page numbers). Even if you find a model with a context window large enough to accept it, you run into several brick walls:

- Cost and rate limits. You pay for every input token and output token. Many cloud providers also limit tokens per minute and requests per minute. Pushing hundreds of thousands of tokens for a single bidder throttles your entire pipeline.

- Latency and throughput. Huge prompts serialize your work. If you wait minutes per bidder for a single pass, you cannot iterate, and you cannot compare ten bidders in the same afternoon.

- Reliability. You're asking the model to “remember” the entire book and then find needles inside it. That is when hallucinations creep in and you get vague answers without citations. No audit team will sign off on that.

- Auditability. Procurement lives and dies by traceability. If your compliance rows don't have page numbers and quotes, you end up re-reading the document anyway.

Let's check this with some math. Let:

- $D$ be the number of tokens in a single bidder document after extraction.

- $R$ be the number of rules you must check (the requirements you derive from the RFP).

- $P$ be the average number of tokens in your prompt instructions and fixed scaffolding.

- $O$ be the average number of output tokens per result.

The “send it all” approach typically looks like this:

- Single giant call per bidder: one pass to decide all rules at once. Tokens per bidder $\approx D + P + R \cdot O$.

- Per-rule calls (worse): if you ask separately for each rule while still sending the full document every time, tokens per bidder $\approx R \cdot (D + P + O)$.

With $D \approx 400\text{K}$–$600\text{K}$ (very typical for 1000 pages), even the single-call version is not realistic for most models and deployments. If you split the document into big sections and send multiple giant calls, you're just summing huge numbers: roughly $D$ anyway, plus the overhead $P$ each time.

So what looks feasible? You don't need the entire haystack for each rule - just the few sentences or table rows that actually prove compliance. The goal is to make $D$ disappear from the equation you send to the model.

The solution that works: rules first, snippets second, citations always

Here's the plan in plain language. First, turn the RFP into a short, testable catalog of rules. Do this once. Then read the bidder's pack locally, clean it, and split it into small overlapping windows (“chunks”) that keep page numbers and table rows intact. Build a simple local search index over those chunks. For each rule, use a lightweight retrieval step to pick two to four short passages - ideally from different pages or sections - so together they have everything the model needs to make a call. Then ask the model for a verdict with a must-cite contract: the answer must include a short verbatim quote and page numbers from the provided passages, or it must say “Missing.” Finally, verify the quote locally (string match), score the result, and move on. No full document ever goes to the model.

When you do this, the token math changes completely. Let:

- $k$ be the number of passages (snippets) you send per rule (usually 2–4).

- $s$ be the size in tokens of each passage (we'll keep them around 180–240 tokens).

- $r$ be the size of the rule text itself (a couple of dozen tokens when written concisely).

Then, with $P$ the prompt scaffolding tokens and $O$ the output tokens per result as before, the tokens per rule become:

$$T_{\text{rule}} = P + r + k \cdot s + O$$

and the tokens per bidder, for $R$ rules, become:

$$T_{\text{bidder}} = R \cdot T_{\text{rule}} = R \cdot (P + r + k \cdot s + O)$$

Even better, you don't need to make one call per rule. You can pack several rules into a single request to stay well under request-per-minute caps. Suppose you pack $m$ rules per request (for example, 5 rules at a time). The total tokens don't change, but your requests do: $\lceil R/m \rceil$ calls instead of $R$ calls.

Let's plug in realistic numbers. For example, take $D = 250\text{K}$ tokens for a document of roughly 500 pages, and:

- $R = 80$ rules (a medium size catalog).

- $k = 3$ passages per rule.

- $s = 200$ tokens per passage (sentence-level, not a full page).

- $r \approx 30$ tokens (short, testable rule).

- $P \approx 200$ tokens (concise instructions and the JSON schema).

- $O \approx 150$ tokens per rule (small JSON verdict with quote and pages).

Then per rule:

$$T_{\text{rule}} = P + r + k \cdot s + O \approx 200 + 30 + 3 \cdot 200 + 150 = 980 \text{ tokens}$$

and per bidder:

$$T_{\text{bidder}} = R \cdot T_{\text{rule}} \approx 80 \cdot 980 = 78{,}400 \text{ tokens}$$

Round it to seventy or eighty thousand to taste; it will depend on your exact prompt and output formatting. That is about 3.2 times fewer tokens than sending the full 250K, and it produces structured, citeable results. Most importantly: you never asked the model to “remember” a book; you only asked it to judge a paragraph or two for each rule.
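If you want to re-run this arithmetic for your own catalog, a tiny calculator is enough. The following is a minimal sketch, not part of the pipeline itself: the names `TokenBudget`, `PerRule`, and `Estimate` are made up for illustration, and every input you pass in (prompt size, rule size, output size, rules per request) is an assumption you should replace with values measured on your own prompts.

```csharp
using System;

public static class TokenBudget
{
    // Tokens for one rule: scaffolding + rule text + passages + verdict.
    public static int PerRule(
        int promptTokens, int ruleTokens, int passages, int passageTokens, int outputTokens)
        => promptTokens + ruleTokens + passages * passageTokens + outputTokens;

    // Total tokens for one bidder and the number of packed requests needed.
    public static (int TotalTokens, int Requests) Estimate(
        int rules, int rulesPerRequest, int tokensPerRule)
    {
        if (rulesPerRequest <= 0)
            throw new ArgumentOutOfRangeException(nameof(rulesPerRequest));

        int requests = (rules + rulesPerRequest - 1) / rulesPerRequest; // ceiling division
        return (rules * tokensPerRule, requests);
    }
}
```

With illustrative inputs such as `TokenBudget.PerRule(200, 30, 3, 200, 150)` you get 980 tokens per rule, and `TokenBudget.Estimate(80, 5, 980)` gives 78,400 tokens across 16 requests. Change any one input and you can see immediately whether the budget still fits your quota.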

“But won't I miss something important by not sending the full thing?” That's the right question to ask, and the answer is no if you do two simple things: overlap your local windows so boundary sentences aren't cut in half, and retrieve broadly before you select the final 2–4 passages. In other words, make the selection step generous and the model step minimal.

Now let's see how we can do this.

Step 1: build the RULE CATALOG once

The rule catalog is just a compact list of things you can test, and it contains all the requirements and provisions from the RFP. You want each item to be short enough to fit comfortably in a prompt next to two or three snippets, and concrete enough that a person could say “yes,” “partial,” or “no” by reading a single paragraph or table row. That means no fluff like “we prefer robust partners.” Instead, “bid security: 2% of total price, validity at least 90 days (Clause 3.2).” You can extract this catalog one time with a small model call, or you can code a deterministic parser if your RFPs are structured. The important part is that you lock it as version 1 and reuse it for all bidders.

You never send the entire RFP to the model again!

A minimal C# data object might look like this:

    using System.Collections.Generic;

    public record Requirement(
        string Id,         // "R12"
        string Type,       // "Admin" | "Technical" | "Commercial"
        bool Mandatory,    // true if failure disqualifies
        string Text,       // "Bid security 2% of total price; validity >= 90 days"
        string? ClauseRef  // "3.2" or "Section III, 3.2"
    );

    public record Catalog(string RfpId, int Version, List<Requirement> Requirements);

You store the catalog with a hash of the RFP text. If the RFP changes, you create version 2.
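One way to detect that the RFP changed is to hash its normalized text and compare it with the hash stored next to the catalog. This is a sketch under assumptions: `CatalogVersioning`, `HashRfpText`, and `NextVersion` are hypothetical names, and collapsing whitespace before hashing is our choice so that re-extraction noise does not trigger a new version.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;
using System.Text.RegularExpressions;

public static class CatalogVersioning
{
    // SHA-256 of the RFP text as lowercase hex. Whitespace is collapsed first so that
    // line wraps and double spaces from extraction do not look like a new RFP.
    public static string HashRfpText(string rfpText)
    {
        string normalized = Regex.Replace(rfpText, @"\s+", " ").Trim();
        byte[] digest = SHA256.HashData(Encoding.UTF8.GetBytes(normalized));
        return Convert.ToHexString(digest).ToLowerInvariant();
    }

    // Unchanged text keeps the current version; changed text bumps it by one.
    public static int NextVersion(int currentVersion, string storedHash, string rfpText)
        => HashRfpText(rfpText) == storedHash ? currentVersion : currentVersion + 1;
}
```

If `NextVersion` returns a higher number, re-extract the catalog and store the new hash with it; otherwise keep reusing the locked catalog for every bidder.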

Step 2: normalize and chunk the document locally

You never send the raw PDF to the model. You read it locally once, clean it up, and split it into small overlapping windows. Keep page numbers and headings as metadata. Keep table rows intact. Fix line wraps and hyphenation so phrases don't split in the middle of words. A few reasonable defaults make this work out of the box:

- target window size around 180–240 tokens (say 700–1,000 characters),

- overlap around 30–35 percent (so a sentence at the end of one window will also be at the start of the next),

- never split a bullet point or a table row across windows,

- record page, headingPath, and a boolean flag like IsTableRow.

The chunker itself can be very simple. Here is a deliberately small routine to illustrate the idea. It assumes you've already extracted text per page and that you can identify sentence boundaries and table rows - use your favorite libraries for that part.

We prefer Azure AI Document Intelligence for this part. It can extract text from PDFs and images, identify and extract tables with their rows and columns, detect document layout and structure, handle forms and key-value pairs, and understand document semantics.

Now the chunker:

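    using System;
    using System.Collections.Generic;

    public sealed record Chunk(
        string Id,          // e.g. "p12_c03"
        int Page,
        string HeadingPath, // e.g. "Section 3. Bid Security"
        string Text,
        bool IsTableRow
    );

    public static class Chunker
    {
        // SplitIntoSentences and TrimToMaxTokens are helpers you supply yourself.
        public static IEnumerable<Chunk> BuildChunks(
            IEnumerable<(int Page, string HeadingPath, string Text, bool IsTableRow)> blocks,
            int targetTokens = 200,
            int maxTokens = 260,
            double overlap = 0.35)
        {
            var result = new List<Chunk>();
            var buffer = new List<string>(); // sentences of the chunk being built
            int fresh = 0;                   // buffered sentences that are not carried-over overlap
            int page = 0;
            string heading = "";
            int counter = 0;

            // Rough estimate (~4 characters per token); use a real tokenizer in production code.
            static int TokenCount(string s) => (int)Math.Round(s.Length / 4.0);

            // Emits the buffer as one narrative chunk, then seeds the next chunk with overlap.
            void Flush(bool carryOverlap)
            {
                if (fresh > 0) // a buffer that holds only overlap was already emitted
                {
                    var text = TrimToMaxTokens(string.Join(" ", buffer), maxTokens);
                    result.Add(new Chunk($"p{page}_c{++counter:D2}", page, heading, text, false));
                }

                // Carry whole trailing sentences (never partial words) up to the overlap budget.
                var tail = new List<string>();
                int tailTokens = 0;
                for (int i = buffer.Count - 1; carryOverlap && i > 0; i--)
                {
                    tailTokens += TokenCount(buffer[i]);
                    if (tail.Count > 0 && tailTokens > targetTokens * overlap) break;
                    tail.Insert(0, buffer[i]);
                }
                buffer = tail;
                fresh = 0;
            }

            foreach (var block in blocks)
            {
                if (block.IsTableRow)
                {
                    // Keep the row intact and separate: flush pending narrative first,
                    // emit the row as its own chunk, and carry no overlap across it.
                    Flush(carryOverlap: false);
                    page = block.Page;
                    heading = block.HeadingPath;
                    result.Add(new Chunk($"p{page}_c{++counter:D2}", page, heading, block.Text, true));
                    continue;
                }

                // Note: a chunk that spans two blocks is labeled with the later block's page.
                page = block.Page;
                heading = block.HeadingPath;
                foreach (var s in SplitIntoSentences(block.Text))
                {
                    buffer.Add(s);
                    fresh++;
                    if (TokenCount(string.Join(" ", buffer)) >= targetTokens)
                        Flush(carryOverlap: true);
                }
            }

            Flush(carryOverlap: false); // emit the remainder if it holds anything new
            return result;
        }
    }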

Is this perfect? No; it is here mostly to illustrate the point. Is it good enough to keep phrases together and tables intact so your downstream search can find them? Yes. The important part is that overlap lives only in your local store. You are not sending every chunk to the model.
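The chunker calls two helpers, `SplitIntoSentences` and `TrimToMaxTokens`, that it leaves to you. If you just want something to experiment with, a naive version could look like the sketch below. It is an assumption-laden stand-in, not a recommendation: the class name `ChunkText` is hypothetical, the regex splitter will mis-split on abbreviations such as "No. 5", and the trim uses the same rough four-characters-per-token estimate as the chunker.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

public static class ChunkText
{
    // Naive boundary: '.', '!' or '?' followed by whitespace and then an uppercase
    // letter, a digit, an opening bracket, or a straight double quote.
    private static readonly Regex SentenceBoundary =
        new(@"(?<=[.!?])\s+(?=[A-Z0-9(""])", RegexOptions.Compiled);

    public static IReadOnlyList<string> SplitIntoSentences(string text) =>
        SentenceBoundary.Split(text)
            .Select(s => s.Trim())
            .Where(s => s.Length > 0)
            .ToList();

    // Cuts text to roughly maxTokens (~4 characters per token), backing up to the
    // previous space so the cut never lands inside a word.
    public static string TrimToMaxTokens(string text, int maxTokens)
    {
        int maxChars = maxTokens * 4;
        if (text.Length <= maxChars) return text;

        int cut = text.LastIndexOf(' ', maxChars);
        return text[..(cut > 0 ? cut : maxChars)].TrimEnd();
    }
}
```

To use these from the chunker, call them as `ChunkText.SplitIntoSentences(...)` and `ChunkText.TrimToMaxTokens(...)`, or add `using static ChunkText;`. For production, a layout-aware extractor and a real tokenizer are the safer choice.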

Step 3: index and tag once (use BM25, not a neural network retriever)

Once you have chunks, build a small, classical index. You don't need a neural retriever for this job; BM25 (the ranking formula behind many search engines) loves numbers and acronyms and does a very decent job on legal language. On top of that, run a tiny sweep once per chunk to tag things that are obviously relevant to many rules: money amounts and currencies, percentages, days, ISO or EN codes, words like “bond”, “security”, “guarantee”, “validity”, “shall”, and “must”, and most importantly, table rows where price schedules live.

Here's a tiny tagger:

    using System.Collections.Generic;
    using System.Text.RegularExpressions;

    public static class Tags
    {
        public static readonly Regex Money = new(@"(?i)(€|\$|USD|EUR|GBP|BAM|KM)\s?\d[\d\.\, ]*", RegexOptions.Compiled);
        public static readonly Regex Percent = new(@"(?<!\d)(\d{1,3})\s?%", RegexOptions.Compiled);
        // Accepts "90 days", "90 calendar days", "(90) days" and "90-day".
        public static readonly Regex Days = new(@"(?i)\b\d{1,4}\)?[\s-]+(?:(?:calendar|working)\s+)?days?\b", RegexOptions.Compiled);
        // "bank" is included so that "Bank Guarantee" forms are tagged as well.
        public static readonly Regex Bond = new(@"(?i)\b(bid|performance|bank)\s+(bond|security|guarantee)\b", RegexOptions.Compiled);
        public static readonly Regex Iso = new(@"(?i)\bISO\s?\d{3,5}(:\d{4})?\b|\bEN\s?\d+\b", RegexOptions.Compiled);
        public static readonly Regex Must = new(@"(?i)\b(shall|must|required|grounds for rejection)\b", RegexOptions.Compiled);
    }

    // Declare this inside your pipeline class. Run it once per chunk and cache the result.
    public static HashSet<string> TagChunk(Chunk c)
    {
        var t = new HashSet<string>();
        if (Tags.Money.IsMatch(c.Text)) t.Add("money");
        if (Tags.Percent.IsMatch(c.Text)) t.Add("percent");
        if (Tags.Days.IsMatch(c.Text)) t.Add("days");
        if (Tags.Bond.IsMatch(c.Text)) t.Add("bond");
        if (Tags.Iso.IsMatch(c.Text)) t.Add("iso");
        if (Tags.Must.IsMatch(c.Text)) t.Add("must");
        if (c.IsTableRow) t.Add("table");
        return t;
    }

You can improve this tagger to your heart's content. We used a HashSet because it is a simple collection with fast lookups and the list of tags will only grow, but other data structures work as well.
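For intuition about what the BM25 index does with your chunks, here is a minimal in-memory sketch. It is an illustration under assumptions, not the index we run in production: `Bm25Index` is a hypothetical class, the tokenizer is a simple regex that keeps digits and a trailing `%`, the parameters are the commonly used defaults k1 = 1.2 and b = 0.75, and the IDF uses the non-negative `ln(1 + (N - n + 0.5) / (n + 0.5))` variant.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

public sealed class Bm25Index
{
    private const double K1 = 1.2, B = 0.75; // common default parameters
    private readonly List<Dictionary<string, int>> _termFreqs = new();
    private readonly List<int> _lengths = new();
    private readonly Dictionary<string, int> _docFreq = new();
    private readonly double _avgLength;

    // Lowercase tokens; digits and a trailing '%' are kept so "2%" and "9001" stay searchable.
    public static List<string> Tokenize(string text) =>
        Regex.Matches(text.ToLowerInvariant(), @"[a-z0-9]+%?")
             .Select(m => m.Value)
             .ToList();

    public Bm25Index(IEnumerable<string> documents)
    {
        foreach (var doc in documents)
        {
            var tokens = Tokenize(doc);
            var tf = tokens.GroupBy(t => t).ToDictionary(g => g.Key, g => g.Count());
            foreach (var term in tf.Keys)
                _docFreq[term] = _docFreq.GetValueOrDefault(term) + 1;
            _termFreqs.Add(tf);
            _lengths.Add(tokens.Count);
        }
        _avgLength = _lengths.Count == 0 ? 0 : _lengths.Average();
    }

    // Returns (document index, score) for the best matches, highest score first.
    public List<(int Index, double Score)> Search(string query, int top)
    {
        var terms = Tokenize(query).Distinct().ToList();
        var hits = new List<(int Index, double Score)>();
        for (int i = 0; i < _termFreqs.Count; i++)
        {
            double score = 0;
            foreach (var term in terms)
            {
                if (!_termFreqs[i].TryGetValue(term, out int f)) continue;
                int n = _docFreq[term];
                double idf = Math.Log(1 + (_termFreqs.Count - n + 0.5) / (n + 0.5));
                double norm = f + K1 * (1 - B + B * _lengths[i] / _avgLength);
                score += idf * f * (K1 + 1) / norm;
            }
            if (score > 0) hits.Add((i, score));
        }
        return hits.OrderByDescending(h => h.Score).Take(top).ToList();
    }
}
```

Build it once per bid pack from the chunk texts and keep the returned indexes aligned with your chunk list. Because matching is on exact tokens, a query like "ISO 9001" or "2%" hits only chunks that literally contain those terms, which is exactly why BM25 behaves well on numbers and standards.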

Step 4: choose the two to four passages per rule

This is the heart of the system and also the part that architects usually overcomplicate. The selection step can be simple and still very strong: search widely, merge with tags, rerank for “evidence quality”, and force diversity so your final two or three passages are not near-duplicates.

A straightforward recipe looks like this:

- Build a query from the rule text. Pull sentry terms like numbers and standards: “2%”, “90 days”, “ISO 9001”, “bid bond”, “validity”. Add a few synonyms if needed (“bond/security/guarantee”, “two percent”, etc.).

- Ask the BM25 index for the top 30 chunks for that query.

- Union that list with tagged candidates that match the rule's type - if the rule mentions “bond”, pull every chunk that carries the bond tag; if it's a price rule, include table.

- Give each candidate a simple score: the BM25 rank turned into a score (higher is better), plus small boosts for the presence of all sentry terms, plus a boost if the chunk comes from the right section (headingPath contains “Bond” or “Security”), plus a bonus if the chunk is a table row when the rule is numeric, minus a small penalty if the chunk is overly long.

- Take the top passage, then apply a diversity filter: prefer the next passage to be from a different page or section, or a different format (table vs narrative). The formal name is Maximal Marginal Relevance (MMR), but you can also think of it as “avoid same page and same heading if possible”.

- Stop after 2–4 passages. If the rule is mandatory and you don't see the key number or unit in any selected chunk, broaden the search (synonyms, nearby sections, table priority) and try again for that rule only.

Here is a deliberately short “skeleton” of the selection step (the BM25 index is abstracted as ISearchIndex):

    public sealed record Candidate(Chunk Chunk, double Score);

    // ExpandQuery, ShouldConsiderByTag, Fuse, Score and IsDiverse are helpers left abstract here.
    public IEnumerable<Chunk> SelectPassages(
        Requirement rule,
        ISearchIndex index,
        IReadOnlyList<Chunk> allChunks,
        int k = 3)
    {
        var query = ExpandQuery(rule.Text); // add synonyms/variants
        var top = index.Search(query, 30);  // returns (Chunk, rank)

        // Chunks whose tags match the rule's type. In real code, cache the tags per chunk
        // instead of recomputing them for every rule.
        var tagged = allChunks
            .Where(c => ShouldConsiderByTag(rule, TagChunk(c)))
            .Distinct();

        // Merge BM25 hits with the tagged candidates.
        var fused = Fuse(top.Select(t => t.Chunk).Concat(tagged));

        var reranked = fused
            .Select(c => new Candidate(c, Score(rule, c)))
            .OrderByDescending(x => x.Score)
            .ToList();

        var chosen = new List<Chunk>();
        foreach (var cand in reranked)
        {
            if (chosen.Count >= k) break; // checked first, so k = 1 never returns two passages

            // The best candidate is always taken; later ones must add diversity.
            if (chosen.Count == 0 || IsDiverse(cand.Chunk, chosen))
                chosen.Add(cand.Chunk);
        }

        return chosen;
    }

This is intentionally plain: it's more important that you always include a table row for price or quantity rules and always ensure passages come from different pages or headings, than it is to fight over a fancy ranking function.
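To make the two abstract helpers in the skeleton less abstract, here is one possible shape for `IsDiverse` and `Score`. Treat it as a sketch: the `Passage` record is a hypothetical stand-in for the chunk type, the signatures are simplified compared with the skeleton, and every weight (0.5, 0.3, 0.2) is an illustrative guess that you would tune on labeled tenders.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public sealed record Passage(int Page, string HeadingPath, string Text, bool IsTableRow);

public static class Selection
{
    // Diverse = differs from every chosen passage in page, heading, or format
    // (table row vs narrative).
    public static bool IsDiverse(Passage candidate, IReadOnlyCollection<Passage> chosen) =>
        chosen.All(c =>
            c.Page != candidate.Page ||
            c.HeadingPath != candidate.HeadingPath ||
            c.IsTableRow != candidate.IsTableRow);

    // Additive score: rank-based base score plus small boosts, minus a length penalty.
    public static double Score(
        Passage p,
        int bm25Rank,                              // 0 = best BM25 hit
        IReadOnlyCollection<string> sentryTerms,   // e.g. "2%", "90 days"
        IReadOnlyCollection<string> sectionHints,  // e.g. "Bond", "Security"
        bool ruleIsNumeric,
        int maxChars = 1000)
    {
        double score = 1.0 / (1.0 + bm25Rank);

        if (sentryTerms.Count > 0 &&
            sentryTerms.All(t => p.Text.Contains(t, StringComparison.OrdinalIgnoreCase)))
            score += 0.5; // all key terms present

        if (sectionHints.Any(h => p.HeadingPath.Contains(h, StringComparison.OrdinalIgnoreCase)))
            score += 0.3; // comes from the expected section

        if (ruleIsNumeric && p.IsTableRow) score += 0.3; // tables carry prices and quantities
        if (p.Text.Length > maxChars) score -= 0.2;      // overly long passage

        return score;
    }
}
```

The exact numbers matter less than the ordering they produce: a table row with every sentry term from the right section should beat a long narrative paragraph that merely mentions one of them.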

Step 5: ask the model in small, packed requests

You now have, for each rule, a tiny requirement text and two or three short passages with page numbers and headings. Instead of making one request per rule, pack five rules into a single call to reduce request-per-minute pressure. The prompt for each rule follows the same shape:

- here is the rule,

- here are the passages.

Now return:

- strict JSON with status (Pass, Partial, Fail, or Missing),

- a short rationale,

- a verbatim quote, and

- the page numbers.

The crucial instruction is the must-cite contract: if a verbatim quote cannot be copied from one of the provided passages, the correct answer is Missing. That is your guardrail against “creative” answers. You also keep the output small on purpose; this is a classification task with evidence, not storytelling.

A simplified payload for a single rule might look like this when you assemble the request:

    {
      "requirement": { "id": "R12", "text": "Bid security: 2% of total price; validity >= 90 days (Clause 3.2)", "mandatory": true },
      "passages": [
        { "page": 47, "heading": "Section III > 3.2 Bid Security", "text": "The bid security shall be two percent (2%) of the total bid price and shall remain valid for at least ninety (90) days after bid validity expires." },
        { "page": 112, "heading": "Annex G > Forms", "text": "Form BS-1: Bank Guarantee ... amount: 2% of total price; validity: 90 days ..." }
      ],
      "return_schema": {
        "status": ["Pass","Partial","Fail","Missing"],
        "rationale": "string <= 80 words",
        "evidence_quote": "string <= 40 words (must be verbatim from a passage)",
        "page_refs": "array<int>",
        "risk_level": ["Low","Medium","High"]
      }
    }

The complete request contains five such entries side by side and asks for an array of results. Keep the model temperature low and the instructions concise.
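Packing itself is a small grouping exercise. The sketch below assumes .NET 6 or later (for `Enumerable.Chunk` and `System.Text.Json`); the types `PassageDto` and `RuleEntry`, the class `RequestPacker`, and the `items` wrapper property are hypothetical names, and the instructions plus return schema are assumed to be sent once per request rather than repeated per rule.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

public sealed record PassageDto(int Page, string Heading, string Text);

public sealed record RuleEntry(
    string Id, string Text, bool Mandatory, IReadOnlyList<PassageDto> Passages);

public static class RequestPacker
{
    private static readonly JsonSerializerOptions Options =
        new() { PropertyNamingPolicy = JsonNamingPolicy.CamelCase };

    // Splits the rule entries into groups of rulesPerRequest and serializes each
    // group as the JSON body of one model call.
    public static List<string> Pack(IEnumerable<RuleEntry> entries, int rulesPerRequest = 5) =>
        entries
            .Chunk(rulesPerRequest) // Enumerable.Chunk, available from .NET 6
            .Select(batch => JsonSerializer.Serialize(new { items = batch }, Options))
            .ToList();
}
```

Twelve rule entries with the default of five per request produce three bodies holding five, five, and two rules. Each body goes out as one call, so the request count drops while the token total stays roughly the same.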

Step 6: verify locally and score

When you get the JSON back, you don't trust it blindly. You check that the evidence_quote appears verbatim in one of the passages you sent (simple string match) and that the page numbers exist. If a mandatory rule comes back Missing or fails verification, you can run a second retrieval variant for that rule only (for example, widen the search across nearby sections or prefer tables). Most of the time, you won't need this fallback.
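The local check can be as plain as the paragraph suggests. Here is a minimal sketch, assuming the passages you sent are available as (page, text) pairs; the class name `EvidenceCheck` and the whitespace normalization are our additions, the latter so that a line wrap inside a passage does not fail an otherwise verbatim quote.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

public static class EvidenceCheck
{
    // Collapse runs of whitespace so line wraps do not break the match.
    private static string Normalize(string s) => Regex.Replace(s, @"\s+", " ").Trim();

    // True when the quote is non-empty, appears verbatim in at least one sent passage,
    // and every cited page belongs to a passage that was actually sent.
    public static bool IsVerified(
        string evidenceQuote,
        IReadOnlyCollection<int> pageRefs,
        IReadOnlyCollection<(int Page, string Text)> sentPassages)
    {
        string quote = Normalize(evidenceQuote);
        if (quote.Length == 0 || pageRefs.Count == 0) return false;

        bool quoteFound = sentPassages.Any(p =>
            Normalize(p.Text).Contains(quote, StringComparison.Ordinal));
        bool pagesExist = pageRefs.All(page => sentPassages.Any(p => p.Page == page));

        return quoteFound && pagesExist;
    }
}
```

The comparison is case-sensitive on purpose: a quote that only matches after lowercasing is not verbatim. If you want a stricter check, you could also require that at least one cited page is the page of the passage that contains the quote.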

Finally, compute the overall gate: if any mandatory rule is Fail or Missing, the bidder is non-responsive. Otherwise, assign a score (for example, Pass = 1, Partial = 0.5) and generate the compliance matrix with links that jump to the exact pages. That's what reviewers want to see.
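The gate and the score fit in a few lines. This sketch makes two assumptions that are ours, not a rule of the method: the score is the average over all rules (Pass = 1, Partial = 0.5, everything else 0), and the names `Status`, `Verdict`, and `Gate` are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public enum Status { Pass, Partial, Fail, Missing }

public sealed record Verdict(string RuleId, bool Mandatory, Status Status);

public static class Gate
{
    // Responsive is false as soon as one mandatory rule is Fail or Missing.
    // Score is the average of Pass = 1, Partial = 0.5, Fail/Missing = 0.
    public static (bool Responsive, double Score) Evaluate(IReadOnlyCollection<Verdict> verdicts)
    {
        bool responsive = !verdicts.Any(v =>
            v.Mandatory && (v.Status is Status.Fail or Status.Missing));

        double score = verdicts.Count == 0
            ? 0.0
            : verdicts.Average(v => v.Status switch
            {
                Status.Pass => 1.0,
                Status.Partial => 0.5,
                _ => 0.0
            });

        return (responsive, score);
    }
}
```

Keeping the gate separate from the score means a non-responsive bidder still gets a number, which is handy when reviewers want to see how close a rejected bid was.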

The “will we lose information?” question answered

Two risks keep people awake: boundary loss (the one sentence that mattered was cut across two windows) and retrieval miss (the one place where the number appears didn't make it into the final 2–4 passages).

Boundary loss is handled by overlap. If you keep around 30–35 percent overlap, any sentence that lands at the end of one chunk is repeated at the beginning of the next. In other words, the probability that a sentence appears in no chunk goes to zero unless your chunker is broken.

Retrieval miss is handled by generous candidate generation and diversity. You don't jump directly to two passages; you first build a wide candidate set by uniting BM25 hits with tagged chunks, then you rerank and choose diverse pages or sections. If the rule is mandatory and the key term still isn't present, you escalate $k$ from 3 to 5 for that rule only, or you run a synonym variant. The math is simple: your model token budget depends on $k$ and $s$ (the number of passages per rule and the tokens per passage); increasing $k$ by two for a handful of rules increases the total by a few thousand tokens, not hundreds of thousands.

If you like a tidy equation, define recall@$K$ as the fraction of human-identified evidence passages that appear somewhere in your fused candidate list of size $K$ (not the final 2–4 you send). The pipeline is healthy when recall@$K$ for mandatory rules sits above 95%. You can measure this on a few labeled tenders and lock it. The diversity filter then chooses the top 2–4 distinct passages from that reliable pool. Together, they almost always contain the exact phrase and numbers the model must quote.

Note that this is recall in the information-retrieval sense: it is measured over the candidate list the retriever returns, not over the labels predicted by a classifier. It is calculated as:

$$\text{recall@}K = \frac{|\{\text{relevant passages}\} \cap \{\text{top-}K \text{ candidates}\}|}{|\{\text{relevant passages}\}|}$$

It essentially asks: "Of all the evidence passages that actually matter, how many are in my candidate list?".
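Measuring this on a labeled tender takes only a few lines. A minimal sketch, assuming evidence passages and candidates are identified by chunk IDs such as "p47_c03"; `RetrievalMetrics` and `RecallAtK` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class RetrievalMetrics
{
    // Fraction of human-labeled evidence chunk IDs found among the first k candidates.
    public static double RecallAtK(
        IReadOnlyCollection<string> relevantIds,
        IReadOnlyList<string> candidateIds,
        int k)
    {
        var relevant = relevantIds.ToHashSet();
        if (relevant.Count == 0)
            throw new ArgumentException("No labeled evidence passages.", nameof(relevantIds));

        var topK = candidateIds.Take(k).ToHashSet();
        return relevant.Count(topK.Contains) / (double)relevant.Count;
    }
}
```

Run it per mandatory rule and average the results; if the average drops below your threshold after a change to the chunker or the tagger, you know before any model call is made.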

Pulling it together

Let's finish where we began. The reason “send the whole document” fails is not that models are dumb; it's because the problem is mis-framed. You don't want an all-knowing summary machine. You want a careful auditor that answers this rule with this quote from this page. You get there by doing the heavy lifting locally (once), and by asking the model to do small, repeatable judgments with a must-cite contract.

The result is a pipeline that's fast enough to keep up with deadlines, cheap enough to run across ten bidders, and boring enough that an auditor will actually trust it. The numbers back it up: a 500-page pack that would explode your token budget becomes roughly sixteen small, packed requests totaling around sixty-to-eighty thousand tokens. You stay comfortably under request-per-minute and tokens-per-minute limits, and you ship a compliance matrix with evidence that your team can stand behind.

In the next post, we'll go deeper on snippet selection and how to fuse search with rule-based tagging, how to score “evidence quality,” and how to force diversity so you do not send three near-identical paragraphs. After that, we'll cover rate-limit strategy with packing and triage, and finally the metrics that convince legal and audit this isn't a toy. For now, if you take one thing away, let it be this: you don't need to feed the haystack to find the needle. You just need to keep the haystack in your own barn, and ask the model to look at the two or three straws that actually matter.
